The concise reference to

the

Ublu

Midrange and Mainframe

Life Cycle Extension Language

An extensible object-disoriented

interpretive language for midrange and mainframe remote

system programming


Copyright © 2019, 2022

Jack J. Woehr

jwoehr@softwoehr.com

All Rights Reserved

SoftWoehr LLC

http://www.softwoehr.com

PO Box 82

Beulah, CO 81023-0082

USA

Author: Jack J. Woehr

Original date: 2013-07-11

Last edit: 2024-11-20

Copyright © 2015, Absolute Performance, Inc.

http://www.absolute-performance.com

Copyright © 2016, 2022, Jack J. Woehr

jwoehr@softwoehr.com http://www.softwoehr.com

All rights reserved.

Redistribution and use in source and binary forms, with or without

modification, are permitted provided that the following conditions are met:

* Redistributions of source code must retain the above copyright notice, this

list of conditions and the following disclaimer.

* Redistributions in binary form must reproduce the above copyright notice, this

list of conditions and the following disclaimer in the documentation and/or

other materials provided with the distribution.

THIS SOFTWARE IS PROVIDED BY THE COPYRIGHT HOLDERS AND CONTRIBUTORS "AS IS" AND

ANY EXPRESS OR IMPLIED WARRANTIES, INCLUDING, BUT NOT LIMITED TO, THE IMPLIED

WARRANTIES OF MERCHANTABILITY AND FITNESS FOR A PARTICULAR PURPOSE ARE

DISCLAIMED. IN NO EVENT SHALL THE COPYRIGHT HOLDER OR CONTRIBUTORS BE LIABLE FOR

ANY DIRECT, INDIRECT, INCIDENTAL, SPECIAL, EXEMPLARY, OR CONSEQUENTIAL DAMAGES

(INCLUDING, BUT NOT LIMITED TO, PROCUREMENT OF SUBSTITUTE GOODS OR SERVICES;

LOSS OF USE, DATA, OR PROFITS; OR BUSINESS INTERRUPTION) HOWEVER CAUSED AND ON

ANY THEORY OF LIABILITY, WHETHER IN CONTRACT, STRICT LIABILITY, OR TORT

(INCLUDING NEGLIGENCE OR OTHERWISE) ARISING IN ANY WAY OUT OF THE USE OF THIS

SOFTWARE, EVEN IF ADVISED OF THE POSSIBILITY OF SUCH DAMAGE.


Overview

Ublu is a tool for ad-hoc process automation primarily aimed at IBM i®.

The Java command line java -jar ublu.jar [args ...] invokes an extensible object-disoriented interpretive language named Ublu, intended for interaction between any Java platform (Java 9 and above) on the one hand, and IBM i® (7.1 and above) and/or IBM z/VM® SMAPI operations programming on the other. The language is multithreaded and offers a client-server mode. It also possesses a built-in single-step debugger.

"Any Java platform (Java 9 and above)"

includes running natively on IBM i. When executing Ublu

interpretively on IBM i, a character set including the

@ sign must be set, e.g., CCSID

37.

- Access to the IBM i server is implemented via the

JTOpen library.

- Access to z/VM SMAPI is provided via the PigIron library (see the

smapi command).

- Interoperation between the Db2 for IBM i database, the PostgreSQL open source database, and others is provided (see the db command).

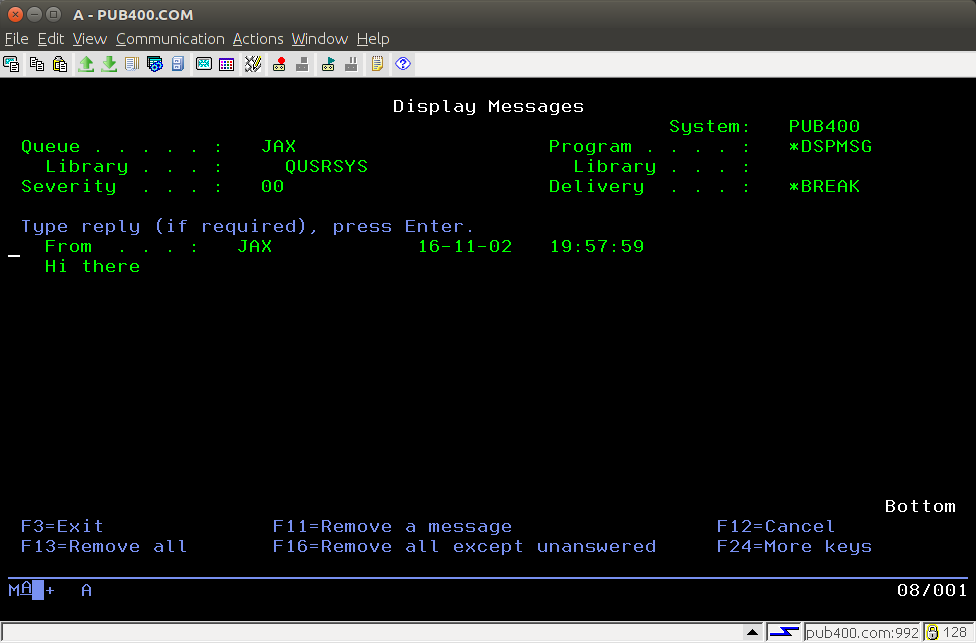

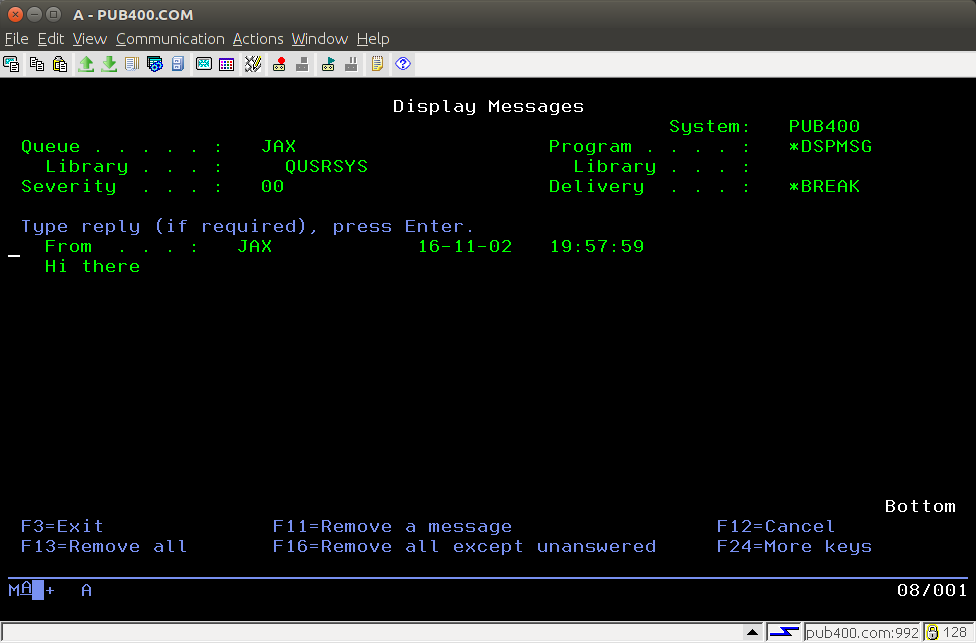

- Terminal "screen-scraping" automation is provided via

tn5250j (see the

tn5250 command).

Other open source software provides further facilities; see the usage command and the license files in the root directory of the distribution.

"Object-disoriented"

means that Ublu is a procedural language with function

definitions which is implemented on top of object-oriented Java

libraries. Ublu manipulates objects constructed in the underlying

environment without forcing the user to know very much about the

object architecture. By way of analogy, consider how resources in

a hierarchical network environment are flattened out for ease of

use by

LDAP. In a similar fashion, Ublu conceals many details while

still providing complete access should a program require it,

e.g., via the calljava

command.

Ublu operates in four (4) modes:

- as a command-line utility

- as an interpreter

- as a text file program compiler/executor via the

include command

- as a TCP/IP port listening server

In any mode, Ublu reads a line and then consumes one or more

commands and their arguments and attempts

to execute them. In certain cases, text parsing crosses line

boundaries, e.g., when a quoted

string or execution block

extends for multiple lines.

Ublu provides the command FUNC to define

functions with argument lists which can later be invoked with

appropriate arguments.

Commands and command features are steadily being added to the

repertoire of Ublu. Suggestions for added commands or extensions

to extant commands are welcome and should be made via whatever

ticketing system is present on the site where Ublu is

distributed.

Each Ublu release is distributed as an archive

ublu-dist.zip containing

- ublu.jar

- a bin directory with a launcher script for Ublu

- the examples directory

- the extensions directory

  - extensions to the base Ublu system written in Ublu itself

- documentation in the userdoc directory

- licenses

- various useful items in the share directory

We recommend the following layout:

/opt/ublu/ublu.jar

/opt/ublu/examples

/opt/ublu/extensions

... etc.

Then define a function for your login shell:

- bash

function ublu () { java -jar /opt/ublu/ublu.jar "$@"; }

- ksh

function ublu { java -jar /opt/ublu/ublu.jar "$@"; }

or use the script bin/ublu.

For more on installation, see "A Typical Installation" in the Ublu Guide.

It can be a good idea to download the source archive for a release or check out the current development version from

https://github.com/jwoehr/ublu.git

When you have the source tree, change directory to the top

level and choose a Maven target (typically, mvn package) or

execute make clean dist as there is a

Makefile in the base directory of the source to

invoke Maven for you. The output will be in the

target directory.

Invocation

You can invoke Ublu using the java command on your

system.

Ublu invocation: java [ java options .. ] -jar ublu.jar [ ublu options .. ] [ ublu commands .. ]

Ublu options:

-i filename [-i filename ..] include all indicated source files

-s if including, include silently, otherwise startup interpreter silently

-t [filename, --] open history file filename or default if --

-h display help and then exit

-v display version info and then exit

-w [properties_file_path] [ ublu commands .. ] starts Ublu in a GUI window which reads from the properties file path provided, processes no other options, interprets any commands provided, then finally waits for more input or menu selections.

Note: SSL support for connections to the

server requires a slightly different invocation and is

discussed here.

bin/ublu is a bash/ksh invocation script.

ublu --help will tell you what you need to know

about invoking Ublu in this manner.

./ublu --help

Ublu is free open source software with NO WARRANTY and NO GUARANTEE, including as regards fitness for any application.

See the file LICENSE you should have received with the Ublu distribution.

This bash/ksh shell script ./ublu starts Ublu if Ublu is installed in a standard fashion in /opt/ublu/

If Ublu is installed elsewhere, use the -u ubluclasspath switch to point this script to the Ublu components.

Usage: [CLASSPATH=whatever:...] ./ublu [-Xopt ...] [-Dprop=val ...] [-u ubluclasspath] [-w [propertiesfile]] [--] [arg arg ..]

where:

-h | --help display this help message and exit 0

-X xOpt pass a -X option to the JVM (can be used multiple times)

-D some.property="some value" pass a property to the JVM (can be used multiple times)

-u ubluclasspath change Ublu's own classpath (default /opt/ublu/ublu.jar)

-w propertiesfile launch Ublu in windowing mode with properties from propertiesfile

... If -w is present, it must be last option.

... If present, must be followed by a properties file path or no more arguments accepted.

... A default share/ubluwin.properties is included with the Ublu distribution.

-- ends option processing

... Must be used if next following Ublu argument starts with dash (-)

[arg arg ...] commands to Ublu

If there is an extant CLASSPATH, the classpath for Ublu is that path postpended to Ublu's classpath.

Exit code is the result of execution, or 0 for -h | --help, or 2 if there is an error in processing options.

On options error, this usage message is issued to stderr instead of to stdout.

Copyright (C) 2018 Jack J. Woehr https://github.com/jwoehr/ublu jwoehr@softwoehr.com

Note that these options are slightly different from the

options used when invoking directly from

Java.

- Ublu commences by initializing the main interpreter and processing the

arguments to the invocation.

- Any arguments starting with the dash character (

-, e.g., -s ) are taken to be options

to the Ublu invocation. Options may have objects, e.g.,

-i myfile.ublu which means "include

myfile.ublu"

- If an option starting with the dash character has

no object and is followed by a regular argument not

meant to be its object, the option should be followed

by two dashes -- so the following argument is not taken

for the object of that option, e.g.

-i

myfile.ublu -s -- put ${ some string }$

- The first option which is simply -- terminates option processing. All further elements of the command line are taken to be input to the Ublu interpreter.

- The first non-option seen in the invocation command line terminates option processing. All further elements of the command line are taken to be input to the Ublu interpreter.

- After option processing terminates, whether on -- or on the first non-option, each remaining argument is passed in order to the initial input line of the interpreter.

- The options to Ublu invocation are as follows:

- -s by itself means "silent, no introductory greeting". When -include or -i is also present, it has another meaning (described below).

  - Another way to avoid the introductory greeting message when invoking in interpret mode is to append the single command interpret to the invocation command line. This nests an interpreter and thus the main interpreter has not yet completed the initial command line.

- -i filename includes an Ublu source file. Any number of files may be included in order in this fashion. If the -s is also present anywhere in the options portion of the invocation command line, then all -i includes will be silent. After all includes, the rest of the command line will be parsed and executed as Ublu commands by the main interpreter, after which the main interpreter will take normal interactive input.

- -t [ historyfilename ] sets the Ublu history mechanism on, either on the default history filename, or on the optional argument to the -t option.

- -h displays Ublu information and invocation help and exits 0.

- There is a "hidden" switch -g which is passed to Ublu by the experimental enhanced Ublu console Goublu to tell Ublu it is running under Goublu. This -g switch should not be invoked manually by the user.

- When invoked with no non-option command line arguments, all

options are processed first.

- If one or more

-i includefilename options

are present, Ublu performs all includes, then prompts and

awaits interactive input.

- If no

-i includefilename options are

present, Ublu prints to standard error information about

the build including time/date stamp, open source usage and

copyright and then the main interpreter prompts and awaits

interactive input.

- When invoked with non-option arguments and none of the

options were

-i, Ublu will execute the arguments

as if one line of commands were issued to the main interpreter

and then exit.

- To continue in interpretive mode after invoking Ublu

with a command line including arguments, make the last

argument the command

interpret . This nests an

interpreter and thus the main interpreter has not yet

completed the initial command line.

- Ublu upon exit returns a result code indicating the success

of the last command that Ublu processed.

- 0 is SUCCESS

- 1 is FAILURE

Ublu 1.2.2 build of 2020-03-28 08:17:37

Author: Jack J. Woehr.

Copyright 2015, Absolute Performance, Inc., http://www.absolute-performance.com

Copyright 2017, Jack J. Woehr, http://www.softwoehr.com

All Rights Reserved

Ublu is Open Source Software under the BSD 2-clause license.

THERE IS NO WARRANTY and NO GUARANTEE OF CORRECTNESS NOR APPLICABILITY.

***

Running under Java 1.8.0_152

Ublu utilizes the following open source projects:

IBM Toolbox for Java:

Open Source Software, JTOpen 9.6, codebase 5770-SS1 V7R3M0.00 built=20181007 @X2

Supports JDBC version 4.0

Toolbox driver version 11.6

---

Postgresql JDBC Driver - JDBC 4.1 42.1.4.jre7 (42.2)

Copyright (c) 1997, PostgreSQL Global Development Group

All rights reserved http://www.postgresql.org

---

tn5250j http://tn5250j.sourceforge.net/

NO WARRANTY (GPL) see the file tn5250_LICENSE

---

SBLIM CIM Client for Java HEAD 2017-01-28 18:34:31

http://sblim.cvs.sourceforge.net/viewvc/sblim/jsr48-client/

Copyright (C) IBM Corp. 2005, 2014

Eclipse Public License https://opensource.org/licenses/eclipse-1.0.php

---

PigIron 0.9.7+ http://pigiron.sourceforge.net

Copyright (c) 2008-2016 Jack J. Woehr, PO Box 51, Golden CO 80402 USA

All Rights Reserved

---

org.json

Copyright (c) 2002 JSON.org

***

Type help for help. Type license for license. Type bye to exit.

>

To exit the system, type bye. Some minimal cleanup will be performed. [Ctrl-D] is effectively the same as bye.

If you must force exit, type exit. No cleanup beyond that provided by Java itself will be performed. [Ctrl-C] is effectively the same as exit.

Memory requirements

Ublu ordinarily runs well with default Java memory values.

However, in performing database operations on large databases one

may be forced to boost the heap allocation considerably on

invocation, e.g.

java -Xms4g -Xmx4g -jar

/opt/ublu/ublu.jar ...

which allocates 4 gigabytes.

Interpreter

As an interpreter, one or more commands with their arguments

can be issued on a line. The commands will be executed in

sequence. If any command fails, generally the interpreter will

abandon the rest of the commands on the line and return to the

prompt. The interpreter mode prompt is a right-arrow (

> ) but will have a number to the left of it

(e.g., 1>) if in a nested interpreter

sublevel.

Nested interpreter sublevel

The interpret command

can be used to enter a nested interpreter sublevel, an

interpreter nested within the previous level of

interpreter.

State is inherited by a nested interpreter sublevel from the

previous level of interpreter.

Some state persists in the previous level of interpreter when

the nested interpreter sublevel ends and some is lost.

When the sublevel ends (via bye or Ctrl-D):

- FUNC definitions made in the sublevel persist.

- Tuple definitions made in the sublevel persist.

- Constant definitions made in the sublevel are lost.

Main interpreter

The instance of the interpreter presented to the user upon

program invocation is called the main

interpreter to distinguish it from nested interpreter sublevels

and from (possibly multiple) interpreter instances created in

server mode.

The Ublu interpreter by default depends upon the Java console.

Java console support is minimal on most platforms. A better front

end console for Ublu called Goublu has been coded in

the Go language and can be downloaded and built if you have the

Go language installed for your

platform.

Server mode

Ublu can launch multiple TCP/IP port servers that accept

connections and bind them to individual interpreter sessions. This allows remote

applications such as web applications to execute Ublu commands

and receive their output. The default listener port is 43860. See

the server command.

The server command can also be used to interpret

a single execution block for each

connection and then disconnect at the end of interpreting the

block. This allows the user to connect to a "canned program"

instead of gaining access to the full interpreter.

Commandline

Invoked as a command line application with one or more commands following the invocation on the shell command line, Ublu processes the commands and their arguments as in the interpreter, then exits. If one of the commands is interpret, the application continues to run until that interpreter encounters the bye or exit command. exit always exits, but bye only unnests one interpreter level. If that interpreter is the main interpreter, Ublu exits.

Input

Input for the main interpreter

can come from the console or from standard input when Ublu is

invoked using some form of shell redirection, e.g., shell pipes (

| ) or "here documents" ( <<

).

Output

In interpreter and commandline mode, command output of Ublu is

written to standard out, with the exception of the following

which are written to standard error:

- error and exception messages

- some user informational messages which appear in

interpretive mode, such as the initial version message,

copyright message

- all help and usage messages

- the prompt

- When interpreter input comes from standard in,

prompting is suppressed.

- When running under Goublu, the

prompt is to standard out.

- In server mode, input comes

from the network socket and prompting is suppressed.

When automating system tasks, it may be helpful to redirect

stderr from the shell command line via

2>/dev/null to discard miscellaneous interpreter

informational output. This, however, discards error messages as

well.
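When Ublu is driven from another program, the separation of standard out from standard error can be combined with the exit code described under Invocation. The following Python sketch is illustrative only: the helper name run_ublu is hypothetical, and the default launcher assumes the recommended /opt/ublu layout and a java executable on the PATH. It captures the two streams separately instead of discarding stderr, so error messages remain available to the caller.

```python
import subprocess

# Assumption: Ublu is installed in the recommended /opt/ublu layout.
DEFAULT_LAUNCHER = ("java", "-jar", "/opt/ublu/ublu.jar")


def run_ublu(commands, launcher=DEFAULT_LAUNCHER):
    """Run Ublu once with the given command words (hypothetical helper).

    Returns (succeeded, stdout_text, stderr_text).
    Exit code 0 is SUCCESS; anything else is treated as failure.
    """
    completed = subprocess.run(
        [*launcher, *commands],
        stdout=subprocess.PIPE,  # command output
        stderr=subprocess.PIPE,  # errors, help and informational messages
        text=True,
    )
    return completed.returncode == 0, completed.stdout, completed.stderr


# Possible use, given a working installation:
#   ok, out, err = run_ublu(["put", "${", "hello", "}$"])
```

Each element of the list is passed as a separate argument, so no shell quoting of ${ and }$ is needed.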

In server mode, Ublu standard out is connected to the network socket. However, Ublu standard error output remains attached to the invoking interpreter's standard error. This makes the main interpreter a monitor of errors occurring in server threads.

Executing commands from a file

Interpretation of a text file of commands and functions

is performed via the command include.

Launch your application from a shell script autogenerated via gensh

Ublu offers a command gensh

which autogenerates a shell script to process arguments to your

custom function via command line switches and invoke your custom

function with those arguments. This is the Ublu model for runtime

program delivery.

Debugger

The dbug command provides interactive

single-step debugging of an execution

block.

Parsing and syntax

Parsing and syntax are simplistic.

Input is parsed left-to-right, with no lookback. Each sequence

of non-whitespace characters separated from other non-whitespace

by at least one whitespace character is parsed as an element. No

extra whitespace is preserved in parsing, not even within

quoted strings (with the important

exception that a quoted string is always returned with a blank

space as the last character).

Note that the simplistic parser imposes one

particularly arbitrary limitation in that within an execution block neither the block opener

$[ nor the block closer ]$ are allowed

to appear inside a quoted string. If you need to have those

symbols in a quoted string, the limitation is easy to get around,

as follows:

put -to @foo ${ $[ }$

put -to @bar ${ $] }$

FOR @i in @foo $[ put -from @i ]$

$[

FOR @i in @bar $[ put -from @i ]$

$]

Quoted strings

Quoting of strings is achieved by placing the string between

two elements ${ and

}$ . The open and close elements of

a quoted string must each be separated by at least one space from

the contents of the string and from any leading or following

commands or arguments.

The string command offers

many manipulations of strings to get what you want.

Whitespace between non-whitespace elements of the string, including line breaks, is compacted into single spaces. For example, however its words are spaced or broken across lines on input, ${ This is a quoted string. }$ represents This is a quoted string. A quoted string is always returned by the parser with a blank space at the end of it.
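The compaction rule can be modeled in a few lines. This Python sketch is not Ublu's parser; the function name parse_quoted is hypothetical, and it only mirrors the two behaviors stated above: runs of whitespace collapse to single spaces, and one blank is appended.

```python
def parse_quoted(raw):
    """Model the described quoting rule for the text between ${ and }$.

    Runs of whitespace (including line breaks) collapse to single spaces,
    and the result always ends with one blank.
    """
    return " ".join(raw.split()) + " "


print(repr(parse_quoted("This   is\n   a quoted string.\n")))
# → 'This is a quoted string. '
```

The trailing blank matters when strings are concatenated, since each quoted piece already brings its own separator.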

A quoted string can span multiple lines. When end-of-line is

reached in the interpreter after the open-quote glyph (

${ ) without finding the close-quote glyph (

}$ ), then string parsing continues and the prompt

changes for any lines following until the close-quote glyph is

encountered. The string parsing continuation prompt is the open-quote glyph enclosed in parentheses: (${)


Tuple variables

A tuple variable is an autoinstancing name-value pair that is either globally accessible within the given interpreter session or made local to a function definition via the LOCAL command. Tuples are always referenced with a "@" as the first character of their name, e.g., @session.

You can direct any kind of object to a tuple with the

-to @tuplename dash-command (adjunct to commands which

support that) and retrieve the value with -from

@tuplename (adjunct to commands which support

that).

You can assign an object from a tuple or from the tuple stack to another tuple or to the tuple stack with the tuple -assign feature.

Tuples can be directly manipulated via the put and tuple commands.

Tuple naming

Tuple variables must be named with one (1) @

character followed by letters, numbers, and underscores in any

combination, length, and order. You might see in the debugger a

tuple name of the form @///19 which denotes a

temporary variable used in function argument binding. Currently

protection from creating illegal tuple names is not enforced by

the interpreter. Unpredictable results can occur

if you use illegal tuple variable names.

The Ublu interpreter starts with certain tuples already

defined, as shown in the chart below:

| name | value | notes |
|---|---|---|
| @true | true | can be used for conditionals, etc. |
| @false | false | can be used for conditionals, etc. |

These defaults are not constant and can be overwritten at

runtime via normal tuple manipulation.

Tuple autoinstancing

A tuple springs into being in the global tuple map when a

previously unused tuple name is used as a destination datasink or as a parameter (input or

output) to a function. When

autoinstanced in this fashion, the new tuple's value is

null. Alternatively, you can create a tuple variable

using the tuple command, e.g. tuple -true @foo will

set @foo to true if it exists already,

or will create @foo and set it to true

if it does not yet exist.

Tuple stack

The system maintains a Last In, First Out (LIFO) stack of tuple variables for programming convenience, manipulated via the lifo command.

A command and its dash-commands that expect tuple arguments can

also take the argument

~ ("tilde")

which signifies "pop the tuple from the tuple stack". An error

will result if the stack is empty.

When a command references a source or destination datasink via the -to or -from dash-commands, that datasink may also be ~ ("tilde"), meaning the source or destination is the tuple stack. Non-tuples, e.g., strings, put to a destination datasink via -to ~ in this fashion are automatically wrapped in an anonymous tuple. Hence the following session:

> put -to ~ ${ but not a clever test }$

> put -to ~ ${ this is a test }$

> string -to ~ -cat ~ ~

> put ~

this is a test but not a clever test
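The order in which the two ~ arguments are popped explains the result. The Python model below is only an analogy for the session above: a plain list stands in for the tuple stack, and the function names are hypothetical. It assumes, as the session output suggests, that the first ~ receives the most recently pushed item.

```python
stack = []  # stands in for the tuple stack


def put_to_stack(text):
    # Models: put -to ~ ${ ... }$  (quoted strings carry a trailing blank)
    stack.append(" ".join(text.split()) + " ")


def string_cat():
    # Models: string -to ~ -cat ~ ~
    # The first ~ pops the top of the stack, the second ~ pops the next item.
    first = stack.pop()
    second = stack.pop()
    stack.append(first + second)


put_to_stack("but not a clever test")
put_to_stack("this is a test")
string_cat()
result = stack.pop()
print(result)
```

Popping from the empty list raises an IndexError, which corresponds to the error Ublu reports when the tuple stack is empty.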

Autonomic Tuple Variables

If the interpreter encounters a tuple variable or the tuple stack pop symbol ~ (tilde) when it is expecting a command, it checks the value of that variable. If the value is of a class in the list of autonomes, that is, classes whose instances are generally passed as the argument to the eponymous dash-command of a specific Ublu command, that command is invoked with the tuple as the eponymous argument, along with any dash-commands and/or arguments which follow. If the variable is not autonomic, the interpreter reports an error.

Thus, the third, fourth and sixth commands of the following

example using ifs to get the size of a file in

the Integrated File System are equivalent:

> as400 -to @mysys MYSYS.com myid mYpAssWoRd

> ifs -to @f -as400 @mysys -file /home/myid/.profile

> ifs -- @f -size

56

> @f -size

56

> lifo -push @f

> ~ -size

56

Autonomic tuple variables offer a useful object-disoriented shorthand which,

along with tuple stack wizardry, one

should consider avoiding in larger programs in the interest of

clarity.

- To test whether the tuple variable

@foo is

autonomic, use tuple -autonomic

@foo

- To display whether the tuple variable

@foo is

autonomic, use tuple -autonome

@foo

- To display a list of all autonomic classes and the Ublu

commands they invoke, use

tuple -autonomes

Numbers

Numbers are signed integers and generally can be input as

decimal, hex (0x00), or octal (000). See also the num command.
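The three notations can be illustrated with a small reader. This Python sketch is an assumption modeled on the common Java convention (a 0x prefix for hex, a leading 0 for octal); it is not taken from Ublu's source, and the name parse_number is hypothetical.

```python
def parse_number(text):
    """Read a signed integer written as decimal, hex (0x..) or octal (leading 0)."""
    sign = 1
    digits = text
    if text[:1] in ("+", "-"):
        sign = -1 if text[0] == "-" else 1
        digits = text[1:]
    if digits.lower().startswith("0x"):
        return sign * int(digits[2:], 16)  # hexadecimal
    if len(digits) > 1 and digits.startswith("0"):
        return sign * int(digits, 8)       # octal
    return sign * int(digits, 10)          # decimal


print(parse_number("255"), parse_number("0xFF"), parse_number("0377"))
# → 255 255 255
```

Note that under this convention a decimal value written with a leading zero, such as 010, is read as octal 8.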

Plainwords

Any whitespace-delimited sequence of non-whitespace characters

provided as an argument to a command or dash-command or

function is a plainword. A plainword can be used in most

cases to represent a number or a single whitespace-delimited

textual item where a tuple

variable or quoted string would

have to be used to contain longer whitespace-including strings of

text.

Constants

Constants are created via the const command. Constants have a string value. The name of a constant has the form *somename and can be used as the argument to a command or dash-command where the syntax notation represents the argument as ~@{something}, and only in such a position. Constants are not expanded within quoted strings.

Constants cannot be used as the argument to a -from

or -to dash-command. Plainwords resembling constants, i.e., starting

with an asterisk * are not mistaken for constants if they have

not been defined as such.

Constants defined in an interpreter level appear in its

nested interpreter

sublevels. However, constants defined in nested interpreter

sublevels do not persist into the previous interpreter level.

Execution blocks

An execution block is a body of commands

enclosed between the block opener $[ and the block

closer ]$ . Execution blocks are used in functor declarations (callable routines) via the

FUN command and with conditional

control flow commands such as FOR

and IF - THEN -

ELSE to express the limit of a code phrase in a

condition.

IF @varname THEN $[ command command .. ]$ ELSE $[

command command ]$

is a generalized example of block usage.

An execution block can contain local variable declarations and their use.

A local variable declaration hides identically named variables

from the global context and from any enclosing block. Inner

blocks to the declaring block have access to the locals in

enclosing blocks, unless, of course, an identically named

variable has been declared local to the enclosed block.

Note that the "comment-to-end-of-line"

command # should

not ever be used in an execution block! An execution block is

treated as one command line, so the comment command will devour

the rest of the block.


An execution block may span several lines; however, the opening bracket ( $[ ) of the block must appear on the same line as, and directly after, the conditional control flow command operating upon it.

Execution blocks may be nested.

In the Tips and Tricks section

of this document is an example which will get a list of active

interactive jobs and search that list for specific

jobs.

Local variables

An execution block can have local tuple variables declared via the LOCAL command whose names hide

variables of the same name which may exist outside the execution

block. Locals disappear at the end of the block in which they are

declared.

Local variables can be used safely even when a global tuple

variable coincidentally of the same name is passed in as a

function argument; no collision results, and both the local and

the function argument can be referenced.

Example

FUNC foo ( a ) $[

LOCAL @a

put -to @a ${ inner @a }$

put -n -s ${ outer a: }$ put -from @@a

put -n -s ${ local a: }$ put -from @a

]$

put -to @a ${ outer @a }$

foo ( @a )

outer a: outer @a

local a: inner @a

Functors

A functor is an anonymous execution block created via FUN which can then be stored in a tuple variable and invoked via CALL and/or associated with a name entry in the function dictionary via defun. Arguments can be passed to the functor. Arguments are call-by-reference; the resolution of these arguments is discussed under Function Parameter Binding.

Functions

A function is a functor associated

with a name entry in the function

dictionary, usually via FUNC

but also via the combination of FUN and defun.

The function dictionary is searched after the list of built-in

commands. Dictionaries can be listed, saved, restored and merged

via the dict command. Arguments

can be passed to the block. All arguments are passed by

reference, i.e., passing a tuple

variable to a function argument list passes the tuple itself,

not the tuple's value, and any alteration of the argument alters

the tuple referred to in the argument list. The resolution of

these arguments is discussed under Function Parameter Binding.

> FUNC yadda ( a ) $[ FOR @word in @@a $[ put -n -s -from @word put ${ yadda-yadda ... }$ ]$ ]$

> put -to @words ${ this that t'other }$

> yadda ( @words )

this yadda-yadda ...

that yadda-yadda ...

t'other yadda-yadda ...

> dict -list

# yadda

ublu.util.Functor@1d4b0e9

FUNC yadda ( a ) $[ FOR @word in @@a $[ put -n -s -from @word put ${ yadda-yadda ... }$ ]$ ]$

> dict -save -to mydict

> FUNC -delete yadda

> dict -list

> dict -restore -from mydict

> dict -list

# yadda

ublu.util.Functor@14fd510

FUNC yadda ( a ) $[ FOR @word in @@a $[ put -n -s -from @word put ${ yadda-yadda ... }$ ]$ ]$

See also the FUN and

defun commands.

Function Parameter Binding

Ublu's interpreter being purely a text interpreter, performing

(almost) no tokenization during interpretation and compilation,

function parameter binding is effected by runtime rewriting

("token pasting") of argument references (e.g.,

@@some_arg) in the execution block to the actual

positional parameter value provided at the time the function is

called.

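Arguments passed to a function or functor can be plainwords, constants, tuple variables, the tuple stack pop symbol ~, quoted strings, or execution blocks. Arguments other than blocks or quoted strings are handled as follows: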

- If the argument provided is a plainword, e.g.,

foo or 1234, or a *const, that text is substituted uncritically at

runtime for all references to that argument in the function

body.

  - CAUTION: The "uncritical substitution" of arguments is performed via regular expression substitution. Thus, if a string or plainword containing a regular expression metacharacter is provided in the arguments to a function, this will cause the substitution to fail. So if an argument contains, for instance, the character $, you should either escape it (e.g., FOO\$ instead of FOO$) or provide the argument in a @variable rather than attempting to pass a string or plainword.

- If the argument provided is a tuple variable, a temporary alias for

the tuple variable named in the invocation argument is created

in a local extension to the tuple map and references to the

argument are rewritten with the name of the temporary in the

function's execution block.

- If the argument is the tuple stack pop symbol

~ that symbol will appear at runtime wherever the

argument is substituted in the function body.

Thus, a function defined as

foo ( a b c ) $[ put @@a put @@b put @@c ]$

and called with arguments

foo ( @bar woof @zotz )

is effectively seen at invocation by the interpreter as

$[ put @bar put woof put @zotz ]$

The temporary alias actually pasted for tuple variable

arguments to functions can be seen in the debugger.
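The CAUTION about regular expression metacharacters can be illustrated outside Ublu. The following Java sketch is not Ublu's source code. It assumes only that the pasting behaves like java.util.regex replacement, in which $ and \ are special inside the replacement text. The class name TokenPasteSketch and its methods are hypothetical.

```java
import java.util.regex.Matcher;

/** Hypothetical illustration of regex-based "token pasting"; not Ublu's actual code. */
final class TokenPasteSketch {

    /** Pastes arg for every @@param reference, using arg directly as the replacement text. */
    static String pasteNaive(String body, String param, String arg) {
        // Assumes param is a plain identifier, so it needs no regex quoting.
        return body.replaceAll("@@" + param + "\\b", arg);
    }

    /** Same as pasteNaive, but quotes arg first so that '$' and '\' are taken literally. */
    static String pasteQuoted(String body, String param, String arg) {
        return body.replaceAll("@@" + param + "\\b", Matcher.quoteReplacement(arg));
    }

    /** Returns true if the naive paste throws for this argument text. */
    static boolean failsNaively(String arg) {
        try {
            pasteNaive("put @@a", "a", arg);
            return false;
        } catch (RuntimeException e) {
            return true;
        }
    }

    public static void main(String[] args) {
        // The function body and arguments from the text above.
        String body = "$[ put @@a put @@b put @@c ]$";
        body = pasteNaive(body, "a", "@bar");
        body = pasteNaive(body, "b", "woof");
        body = pasteNaive(body, "c", "@zotz");
        System.out.println(body);

        // A trailing '$' breaks the naive substitution; escaping or quoting avoids that.
        System.out.println(failsNaively("FOO$"));
        System.out.println(pasteNaive("put @@a", "a", "FOO\\$"));
        System.out.println(pasteQuoted("put @@a", "a", "FOO$"));
    }
}
```

In this sketch the escaped form FOO\$ and the quoted form both paste the literal text FOO$, which mirrors the advice to escape the metacharacter or to pass the value in a tuple variable instead.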

It is perfectly acceptable to name function parameters with the same names as commands or functions. However, this practice can detract from the readability of the code, especially when using the syntax coloring edit modes provided with Ublu.

Function Dictionary

Function definitions are stored in the function

dictionary.

Interpreter instances launched by

the interpret command

inherit the current function dictionary. Any additions within an

interpreter instance are lost when the instance exits back to its

parent interpreter instance.

You can view the current function dictionary or save it to a

file or tuple variable and later

restore it or merge it with the current dictionary. See the

dict and savesys commands.

User ID and password

You supply a user ID and a password in the argument list for any

Ublu command which accesses an AS/400 (iSeries, System/i) host,

or for creating an instance of the host using the as400 command so that you can employ

the -as400 dash-command with

subsequent commands in lieu of constant repetition of the system

name, userid and password. On system operations, if the user ID

or password is incorrect, you will be prompted to enter the

correct user ID and/or password, upon completion of which the

command will proceed.

> joblist testsys frrd oopswrong

Please enter a valid userid for testsys: fred

Please enter a valid password for testsys (will not

echo):

000000/QSYS/SCPF

000736/QSYS/QSYSARB

000737/QSYS/QSYSARB2

... etc.

The behavior of Ublu when a signon attempt fails can be modified.

See the as400 and props commands for details.

DB400 database operations behave differently with regard to an

incorrect userid or password. Unlike the JTOpen systems operation

code, the JTOpen JDBC driver does not provide a programmable exit

for application code to handle an incorrect userid or password,

and instead handles the exception itself by attempting to launch

a 1990's-style Java AWT window prompting for userid and password.

If your environment supports a GUI, all is well: you can supply

the correct userid and password. On the other hand, if your

environment does not support a GUI, then the operation fails and

a confusing exception is thrown complaining about the absence of

a windowing system. You can avoid this windowing behavior and

just allow the operation to fail on incorrect userid/password

with an understandable exception by adding the following

connection property dash-command to

the string of dash-commands for the db command:

-property prompt false

which adds ;prompt=false to the URL for the JDBC

connection and disables the windowing password prompt.
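As a rough picture of what the resulting connection URL looks like, here is a minimal Java sketch. The jdbc:as400:// scheme is the one used by the JTOpen JDBC driver; how Ublu itself assembles the URL is not shown in this document, so the helper buildUrl and the class name Db400UrlSketch are hypothetical.

```java
import java.util.LinkedHashMap;
import java.util.Map;

/** Hypothetical sketch of appending connection properties to a JTOpen JDBC URL. */
final class Db400UrlSketch {

    /** Builds jdbc:as400://system followed by ;key=value for each property, in order. */
    static String buildUrl(String system, Map<String, String> properties) {
        StringBuilder url = new StringBuilder("jdbc:as400://").append(system);
        for (Map.Entry<String, String> p : properties.entrySet()) {
            url.append(';').append(p.getKey()).append('=').append(p.getValue());
        }
        return url.toString();
    }

    public static void main(String[] args) {
        Map<String, String> properties = new LinkedHashMap<>();
        // Corresponds to the dash-command: -property prompt false
        properties.put("prompt", "false");
        System.out.println(buildUrl("testsys", properties));
    }
}
```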

Commands

Commands are the verbs of Ublu. Some have only language

meaning, but the most important commands operate directly upon a

host system affecting its data and operation. Be sure you

understand what you are doing when you use an Ublu

command!

Access to IBM i hosts is provided through IBM's open source

JTOpen

library.

Access to z/VM SMAPI is provided through the author's open

source PigIron

library.

Command Structure

Commands are conceptually structured in three parts:

- command

- dash-commands

- arguments

Not all commands have dash-commands. Not all commands take

arguments. Usually they take one or the other. The general order

of the three parts is as follows:

command [dash-command dash-command-argument

[dash-command-argument dash-command-argument ...] ]

command-argument [command-argument ...]

Where a dash-command is given in square brackets, e.g., [-foo ~@{bazz}], the dash-command is optional.

In this documentation, square brackets and ellipses are used

to describe the command structure. Those square brackets and

ellipses are not part of the syntax of Ublu, merely documentation

notation. See the examples given in the documentation and in the

examples directory in the distribution.

In this documentation, where multiple dash-commands are individually enclosed in square brackets, separated by the .OR. bar ( | ), and enclosed collectively in an outer pair of square brackets, the dash-commands are a set of mutually exclusive optional dash-commands, e.g., [[-foo ~@{bazz}] | [-arf ~@{woof}]]

Where square brackets are missing from a dash-command description, the dash-command, or one of the alternatives in a series of dash-commands separated in the description by the .OR. bar ( | ), is required.

Some dash-commands are required in some contexts, and not in

others. Such cases are explained in the explanatory text for the

command.

Command

A command is a one-word command name. It is the first

element of any Ublu command invocation.

Dash Command

A dash-command is a modifier to the command, itself

often possessing an argument or string of arguments.

All dash-commands with their arguments must appear on the same

line with the command, except that a quoted string or block argument to a command or

dash-command, once started, may span line breaks, thus extending

a command over two or more lines.

If dash-commands specify conflicting operations, the last

dash-command encountered in command processing is the operative

choice.

Often one dash-command is actually the default operation for

the command, so that if no dash-command is provided, this default

provides the operation of the command anyway. These defaults are

noted in the command descriptions.

Eponymous dash-command ( -- )

Many commands are used to create objects of various kinds and

store them in tuple variables.

Later, these same commands operate on the objects which they themselves have created. This sort of command references the tuple containing such an object via an eponymous dash-command, e.g., job -job @some_job etc. This eponymous dash-command can generally be replaced by --, so the example just given could equally be written job -- @some_job etc.

Order of Dash Commands

Often the order in which dash-commands appear on a line does

not matter, but sometimes it does. To be safe, dash commands

should generally follow the command in this order:

- the eponymous

dash-command or the one representing the object being

operated upon

- the two data sink dash-commands,

-from and -to , if used

- any other dash-commands

There are exceptions to this ordering, e.g., the eponymous dash-command must come last for the dbug command.

Argument

An argument is the object or, for multiple arguments,

list of objects necessary for command execution.

Commands may have arguments, and their dash-commands may also

have their own arguments.

All arguments to a command or dash-command must appear on the

same line as the command or dash-command and cannot span a

line-break, except that a quoted

string once started may span line breaks, thus extending a

command over two or more lines.

In command descriptions:

- When an argument is decorated with the tuple character

@ , as in

-somedashcommand @tuple this signifies that a

tuple name is expected.

- When an argument is decorated with both the tuple character

and the stack-pop indicator

~ , as in -somedashcommand ~@tuple

this signifies that either a tuple name or the stack-pop

indicator (popping an appropriate tuple previously pushed to

the stack) is expected.

- When an argument is decorated with the tuple character, the

stack-pop indicator

~

and wrapped in curly braces, as in -somedashcommand

~@{some string} , it signifies that the string argument

may come from a named tuple, or a tuple pushed previously to

the stack, or from an inline quoted string.

- In any position where a quoted string is one of the allowed

argument types, a simple undecorated inline lex ("plainword", no whitespace) is treated as a

quoted string.

- When the string in the description of the argument to a

dash-command consists of alternatives separated by the .OR. bar

(

| ) these are alternative values, usually

literal, for the argument.

- An example is the description of the

dpoint command's dash-command -type

~@{int|long|float} which means that

-type expects an argument, either from a tuple

or from a quoted string or plainword that is the literal string

either int, long, or

float.

Command Example

An example of a command with dash-commands and arguments is

the following:

job -job @j -to @subsys -get subsystem

job is the command.

-job @j is the job command's dash-command for providing the command with an already instanced tuple variable representing the server job the command is to operate upon.

-to @subsys is the job command's dash-command indicating the data sink (in this case, a tuple) to which the output of the job command is to go. Most commands know the -to datasink dash-command.

-get subsystem is the job command's dash-command with single plainword argument indicating what aspect of the job represented by @j we wish to examine.

Note that the above example could equally have

been written:

job -- @j -to @subsys -get subsystem

using the eponymous

dash-command instead of -job.

Datasinks

Command descriptions reference datasinks. A datasink is

a data source or a data destination.

Many commands offer the dash-command

-to which directs the output of the command (often

an object) to the specified datasink. Some commands offer the

-from dash-command, which assigns a source datasink for input during the command; e.g., include, which reads and interprets source code, can take its input from a file or variable.

A datasink is currently one of these types:

- Standard input and output

- Error output

- File

-

Tuple variable

- Tuple stack (pushing and popping named or anonymous

tuples)

- Null output (discard all data directed to this

datasink).

A datasink's type is recognizable from its name.

- STD: represents standard input and output and is the default destination datasink if none is explicitly provided via the -to dash-command.

- ERR: is the standard error output stream.

- NULL: discards output.

- A file can be any filename, relative or fully qualified pathname.

- File names are recognized in datasink assignment simply by their not matching one of the other name patterns for a datasink.

- A named tuple variable is distinguished by starting with @ as in @ThisIsAVar .

- The tuple variable thus named is created if it did not previously exist.

- The tuple stack as a datasink is denoted by the tilde character ~

In the absence of the -to dash-command, the default destination datasink of a command is STD: (standard out).
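The naming rules above amount to a small classification by name. The Java sketch below restates them. It is not Ublu's implementation, and the names DatasinkSketch, Kind and classify are hypothetical.

```java
/** Hypothetical sketch of recognizing a datasink's type from its name. */
final class DatasinkSketch {

    enum Kind { STD, ERR, NULL_OUT, TUPLE, STACK, FILE }

    static Kind classify(String name) {
        switch (name) {
            case "STD:":
                return Kind.STD;       // standard input and output
            case "ERR:":
                return Kind.ERR;       // standard error output
            case "NULL:":
                return Kind.NULL_OUT;  // discard all output
            case "~":
                return Kind.STACK;     // tuple stack
            default:
                // A leading @ marks a tuple variable; anything else is taken as a file name.
                return name.startsWith("@") ? Kind.TUPLE : Kind.FILE;
        }
    }

    public static void main(String[] args) {
        String[] names = { "STD:", "ERR:", "NULL:", "~", "@ThisIsAVar", "mydict" };
        for (String name : names) {
            System.out.println(name + " -> " + classify(name));
        }
    }
}
```

File names fall through to the default branch, which matches the statement that a file is recognized simply by not matching any other pattern.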

When a command results in an object other than a string and

the command's destination datasink is File or Standard or Error

output, Ublu intelligently renders the object as a string.

If the object is of a class which Ublu does not recognize, the

object's toString() method is called to provide the

data.

System, Userid, Password and -as400

In order to access the iSeries (AS400) server, many commands

routinely require in their argument string the following three

items:

- system (name or IP address)

- userid

- password

All such commands allow these three arguments to be omitted if

instead the -as400 dash-command is used to supply an

extant server instance to the command. See the as400 command to learn how to create a server instance to be used and re-used.

Of course, commands that require extended ownership, access control or privilege level on the target system can only be executed via an account with such privileges.

Deprecation of providing system/userid/password as arguments to most commands

Note: Many of the oldest Ublu commands allow

system/userid/password to be supplied as main command arguments

as well as allowing the user to provide an as400 object via the

-as400 dash-command. The older style of command is

deprecated and all code should use the

-as400 style of providing an object created by the as400 command rather than

providing credentials as arguments to most commands.

Commands by Category

List of commands

Here is the list of commands Ublu understands and their

descriptions. Some descriptions below start with a leading-slash

(/) and a number, indicating the number of arguments expected

(excluding dash-commands). The

slash/numbers are not part of the command syntax, and

only serve as documentation: do not enter them yourself. A

plus-sign (+) after the number indicates the number represents a

minimum number of arguments rather than an absolute number.

Optional dash-commands are indicated in [square brackets]. Again,

the brackets are documentation, and not part of the actual

syntax, just enter the dash-command and its argument(s), if

any.

Command summary

Most commands provide a brief summary via help

-cmd commandname. That summary is repeated in the

command descriptions below. The (neither rigorous nor always

entirely accurate) schematic meaning of the summary is as

follows:

commandname /numargs[?] [[-dash-command

[arg arg ...]] [-dash-command [arg arg ...]]

...] [[-mutually-exclusive-dash-command [arg arg

...]] | [mutually-exclusive-dash-command

[arg arg ...]] ...] argument argument [optional-argument]

... : description of command's action

commandname is the name used by the interpreter for the command.

/numargs[?] is a count of how many arguments are nominally expected by the command.

- An optional question-mark ? is appended if this number commonly varies.

- A typical variation in number is the use of the -as400 dash-command to obviate repeated use of the system userid and password arguments.

-dash-command arg represents a mandatory dash-command and its argument.

[-dash-command [arg arg ...]] represents an optional dash-command with optional dash-command arguments.

[[-dash-command [arg arg ...]] [-dash-command [arg arg ...]] ...] represents several dash-commands, each of which may possess arguments.

[-mutually-exclusive-dash-command [arg arg ...]] | [mutually-exclusive-dash-command [arg arg ...]] ...] represents mutually exclusive dash-commands which may not be used together.

argument argument [optional-argument] ... represents the formal arguments to the command.

description of command's action is just that.

If the first dash-command documented for the command is the

-as400 dash-command, then by use of the as400 command to store a system

instance in a tuple and the subsequent use of the

-as400 dash-command, such a command can omit the

three (3) arguments system userid password leaving the

rest of the formal argument list the same as it was.

as400

/3? [-to @var] [--,-as400,-from ~@var] [-usessl] [-ssl

~@tf] [-nodefault] [-new,-instance | -alive | -alivesvc

~@{[CENTRAL|COMMAND|DATABASE|DATAQUEUE|FILE|PRINT|RECORDACCESS|SIGNON]}

| -connectsvc

~@{[CENTRAL|COMMAND|DATABASE|DATAQUEUE|FILE|PRINT|RECORDACCESS|SIGNON]}

| -connectedsvc

~@{[CENTRAL|COMMAND|DATABASE|DATAQUEUE|FILE|PRINT|RECORDACCESS|SIGNON]}

| -connected | -disconnect | -disconnectsvc

~@{[CENTRAL|COMMAND|DATABASE|DATAQUEUE|FILE|PRINT|RECORDACCESS|SIGNON]}

| -ping sysname

~@{[ALL|CENTRAL|COMMAND|DATABASE|DATAQUEUE|FILE|PRINT|RECORDACCESS|SIGNON]}

| -local | -validate | -qsvcport

~@{[CENTRAL|COMMAND|DATABASE|DATAQUEUE|FILE|PRINT|RECORDACCESS|SIGNON]}

| -svcport

~@{[CENTRAL|COMMAND|DATABASE|DATAQUEUE|FILE|PRINT|RECORDACCESS|SIGNON]}

~@portnum | -setaspgrp ~@{aspgrp} ~@{curlib} ~@{liblist} |

-svcportdefault | -proxy ~@{server[:portnum]} | -sockets ~@tf |

-netsockets ~@tf | -vrm ] ~@{system} ~@{user} ~@{password} :

instance, connect to, query connection, or disconnect from an

as400 system

Creates an object instance representing an AS400 system and

manipulates that object. The instance is intended to be stored

in a tuple variable for later

use with the -as400

dash-command to many commands so

that the system, userid and password

need not be repeated with various commands.

Note on SSL: Connection to the system can be encrypted with SSL by means of the -usessl or -ssl dash-commands documented below.

- You will need to create a keystore and add any trusted

certificate to that keystore for any server using self-signed

certificates to which you wish to connect.

- I use

openssl s_client -connect

example.com to display the certificate and

copy-and-paste it to example.cer

- I add the certificate to my keystore with

keytool -import -alias EXAMPLE -file example.cer

-keystore /full/path/to/mykeystore

- You must launch Ublu with the appropriate Java property

pointing to the keystore, e.g.,

java

-Djavax.net.ssl.trustStore=/full/path/to/mykeystore -jar

/opt/ublu/ublu.jar arguments...

- NOTE that creating your own trust

store and making it the trust store for Ublu's Java VM

disconnects the VM from the default Java keystore, making

CA certs there inaccessible.

- If you need both various servers' self-signed certs

and the Java store of CA certs to help validate public

sites, add the default Java certs to the store of your

self-signed certs. E.g., here's what I did on my

installation:

cp ublutruststore ublutruststore.bak;

keytool -importkeystore -deststorepass

yyyyyyy -destkeystore ublutruststore

-srckeystore /usr/local/jdk/jre/lib/security/cacerts

-srcstorepass xxxxxxx

- This allows me to connect via SSL to various sites

featuring self-signed certs and also to IBM Bluemix for

the

watson command.

- The setup of the keystore is discussed in the IBM Support

document

Setup Instructions for Making Secure Sockets Layer (SSL)

Connections with the IBM Toolbox for Java.

When an as400 instance is no longer in use but

your program continues, it is often best to

-disconnect or -disconnectsvc.

An as400 instance stored in a tuple variable

@myas400 is passed to the as400

command using any one of the three dash-command forms:

- -- @myas400

- -as400 @myas400

- -from @myas400

This is a bit redundant but the three forms persist for

historical reasons.

The operations of the dash-commands are as shown:

-new,-instance instances and puts the as400 instance. This is the default operation.

- -instance is deprecated; use -new instead.

-nodefault at instancing time tells Ublu not

to set the service ports to defaults. This is necessary in

the following cases:

- your code is running native on IBM i and you wish the

code to use local calls instead of socket

connectivity

- your code is running native on IBM i and you wish to

cause the code to use Unix sockets by subsequently using

the dash-command

-sockets

- you wish to manually set the service ports (or use

the server's portmapper to find the service ports) via

the dash-command

-svcport

Otherwise, the as400 command automatically

sets the ports to their defaults, after which invocations

of -sockets and -netsockets will

cause an exception.

-usessl indicates connections will be made using Secure Sockets Layer (SSL). This dash-command can only be used at instance creation time (-new).

-ssl ~@tf indicates connections will be made using Secure Sockets Layer (SSL) if the value of the tuple (or pop) ~@tf is true; any other value means "no SSL". This dash-command can only be used at instance creation time (-new).

-alive puts true if the as400 instance passed to the -as400 @var dash-command is connected to any service and that connection is alive, false otherwise.

- Note: on IBM i prior to 7.1 -alive behaves the same as -connected.

-alivesvc puts true if the

as400 instance is connected

(false otherwise) to the specified service,

one of

CENTRAL|COMMAND|DATABASE|DATAQUEUE|FILE|PRINT|RECORDACCESS|SIGNON

and that connection is alive.

- Note: on IBM i prior to 7.1

-alivesvc behaves the same as

-connectedsvc.

-

-connectsvc connects the as400

instance to the specified service, one of

CENTRAL|COMMAND|DATABASE|DATAQUEUE|FILE|PRINT|RECORDACCESS|SIGNON

- Note that it is not usually

necessary to explicitly connect to a service. Ublu

commands perform all necessary connections.

-connectedsvc puts true if the as400 instance is connected (false otherwise) to the specified service, one of CENTRAL|COMMAND|DATABASE|DATAQUEUE|FILE|PRINT|RECORDACCESS|SIGNON

-connected puts true if the as400 instance passed to the -as400 @var dash-command is connected to any service, false otherwise.

-disconnect disconnects all services when used with the -as400 @var dash-command where @var refers to an as400 instance previously instanced.

-disconnectsvc disconnects the as400 instance from the specified service, one of CENTRAL|COMMAND|DATABASE|DATAQUEUE|FILE|PRINT|RECORDACCESS|SIGNON

-svcport ~@{[CENTRAL|COMMAND|DATABASE|DATAQUEUE|FILE|PRINT|RECORDACCESS|SIGNON]} ~@portnum sets the TCP port for the specified service for when the port used is not the default port. A value of -1 for the port number means to use the TCP/IP portmapper service to find the port.

-setaspgrp resets the ASP group, the current library and the library list.

-svcportdefault resets all service ports to their default values.

-ping sysname service checks whether a

specified TCP service used by Ublu, one of

CENTRAL|COMMAND|DATABASE|DATAQUEUE|FILE|PRINT|RECORDACCESS|SIGNON,

or all such services (ALL) are running on the

host specified as sysname.

true is put if the service is available,

false otherwise.

-local puts true if Ublu is running locally on an IBM i server.

-proxy ~@{server[:portnum]} assigns the JTOpen proxy server (a running instance of com.ibm.as400.access.ProxyServer) if one is being used.

-qsvcport ~@{[CENTRAL|COMMAND|DATABASE|DATAQUEUE|FILE|PRINT|RECORDACCESS|SIGNON]} puts the port on which the as400 object is currently set to contact the named service.

-sockets ~@tf if ~@tf is true requires the as400 instance to use socket connections instead of APIs when running locally on an IBM i server.

-netsockets ~@tf if ~@tf is true requires the as400 instance to use Internet domain socket connections instead of Unix sockets when using sockets locally on an IBM i server.

-validate validates the signon for the system+user+password combination represented by the as400 object and puts true or false accordingly.

-vrm puts the Version/Release/Modification of the as400 instance as a 24-bit hex number (with leading zeroes, if any, not shown) of the form xxyyzz where

- xx is the version

- yy is the release

- zz is the modification
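The xxyyzz layout can be unpacked with a few shifts and masks. The following Java sketch assumes the value arrives as the hex text described above. The class VrmSketch and its methods are hypothetical helpers, not part of Ublu or JTOpen.

```java
/** Hypothetical sketch of decoding a -vrm style hex value of the form xxyyzz. */
final class VrmSketch {

    /** Returns { version, release, modification } for hex text such as "70300". */
    static int[] decode(String hex) {
        int vrm = Integer.parseInt(hex, 16);
        int version = vrm >>> 16;              // xx
        int release = (vrm >>> 8) & 0xFF;      // yy
        int modification = vrm & 0xFF;         // zz
        return new int[] { version, release, modification };
    }

    /** Packs the three parts back into hex text, without leading zeroes. */
    static String encode(int version, int release, int modification) {
        return Integer.toHexString((version << 16) | (release << 8) | modification);
    }

    public static void main(String[] args) {
        int[] parts = decode("70300");
        System.out.println("V" + parts[0] + "R" + parts[1] + "M" + parts[2]);
        System.out.println(encode(7, 3, 0));
    }
}
```

Because leading zeroes are dropped, a value such as 70300 has only five hex digits, so parsing it as a number is safer than slicing the text at fixed positions.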

In the absence of dash-commands the default operation is

-instance.

When a signon fails for bad userid or incorrect password, a

signon handler is invoked. The JTOpen class library which

provides connection to the host has a builtin handler, which

opens a GUI window to prompt the user if it can and fails with

a mysterious error message if GUI capabilities are not present,

e.g., in an ssh session which does not pass XWindows thru.

There are also two custom handlers in Ublu, a custom handler

which prompts the user textually, and a null handler which

simply fails the login operation. This behavior can be

controlled on a per-interpreter basis via the props command:

- props -set signon.handler.type BUILTIN uses the JTOpen GUI-based handler

- props -set signon.handler.type CUSTOM uses the Ublu text-based handler

- props -set signon.handler.type NULL uses the Ublu fail-on-error handler

The default is CUSTOM ... users will be

prompted in text mode in case of a signon failure.

In the absence of the explicit switches -usessl

and -ssl, the as400 command will

create SSL (Secure Socket Layer) secure instances if the

correct property is set via the props

command prior to instancing.

- props -set signon.security.type SSL means as400 creates SSL-secured instances

- props -set signon.security.type NONE means as400 creates non-secured instances

The default is NONE.

Example

as400 -to @myas400 mysystem myuserid mypasswd # an instance of the system is now stored in the tuple variable @myas400

joblist -as400 @myas400 # a joblist is now fetched from mysystem on behalf of myuserid.

joblist mysystem myuserid mypasswd # fetches the joblist in the same fashion as the previous command

as400 -- @myas400 -setaspgrp *CURUSR *SYSVAL *CURUSR # sets to defaults, could be new values, liblist could be long string

as400 -- @myas400 -disconnect

ask

/0 [-to datasink] [-from datasink] [-nocons] [-say

~@{prompt string}] : get input from user

ask prompts the user (if a prompt string is provided) and puts the response from the user, who must press [enter] after entering response text.

If Ublu is windowing, ask puts up a requester dialog instead of prompting in the text area.

If the user simply presses [enter] or [OK] without inputting any text, a zero-length string is put. In windowing mode, if the user presses [Cancel], null is put.

If the -from dash-command is set, the prompt comes

from

- The first line of any file datasource

- The string value of a tuple referenced by

-from

If -nocons is set, Ublu will not attempt to

read the console but instead read the standard input. This has

no effect in windowing mode.

Example

> ask -say ${ What is funny? }$

What is funny? :

elephants and giraffes and zoos

elephants and giraffes and zoos

> ask -to @answer -say ${ Do you have anything to say? }$

Do you have anything to say? :

> string -len @answer

0

BREAK

/0 : exit from innermost enclosing DO|FOR|WHILE block

Example

The following will fetch a joblist of active interactive jobs

and look for MARSHA as a user in the job list,

BREAKing when found.

as400 -to @as400 mysystem myuid ********

put ${ Looking for MARSHA in a list of all active interactive jobs }$

joblist -to @joblist -jobtype INTERACTIVE -active -as400 @as400

FOR @j in @joblist $[

job -job @j -get user -to @user

put -to @marsha ${ MARSHA }$

test -to @match -eq @user @marsha

IF @match THEN $[

put -n ${ We found Marsha! }$

job -job @j -info

BREAK

]$ ELSE $[

put -n ${ nope }$

]$

]$

bye

terminates the current interpreter level immediately, ending

processing and discarding any following commands at that level.

It takes no arguments. At the top level, bye exits

Ublu. More cleanup is performed by leaving Ublu via

bye than with exit.

Example

> interpret

1> interpret

2> bye foo bar woof

1> bye

> bye

Goodbye!

$

CALL

/? ~@tuple ( [@parm] .. ) : Call a functor

The functor to be called was created by FUN and is stored in ~@tuple. When the functor was defined, a list of parameter names was provided. CALL is followed by the substitution list: the tuple names to substitute for the parameter names wherever they are encountered in the functor's execution block. The list is surrounded by parentheses, its elements are separated by at least one space, and it must appear all on the same line.

Example

FUN -to @fun ( a b c ) $[ put -from @@a put -from @@b put -from @@c ]$

put -from @fun

ublu.util.Functor@5f40727a ( a b c ) $[ put -from @@a put -from @@b put -from @@c ]$

put -to @aleph ${ this is a }$

put -to @beth ${ and here is b }$

put -to @cinzano ${ la dee dad }$

CALL @fun ( @aleph @beth @cinzano )

this is a

and here is b

la dee dad

FUN -to @fun ( ) $[ put ${ zero param functor }$ ]$

CALL @fun ( )

zero param functor

put -from @fun

ublu.util.Functor@67ba0609 ( ) $[ put ${ zero param functor }$ ]$

See also defun FUN FUNC

calljava

/0 [-to @datasink] -forname ~@{classname} | -class

~@{classname} [-field ~@{fieldName} | -method ~@{methodname}

[-arg ~@argobj [-arg ..]] [-primarg ~@argobj [-primarg ..]]

[-castarg ~@argobj ~@{classname} [-castarg ..]] | -new

~@{classname} [-arg ~@argobj [-arg ..]] [-primarg ~@argobj

[-primarg ..]] [-castarg ~@argobj ~@{classname} [-castarg ..]]

| --,-obj ~@object [-field ~@{fieldName} | -method

~@{methodname} [-arg ~@argobj [-arg ..]] [-primarg ~@argobj

[-primarg ..]] : call Java methods and fields

The calljava command invokes a method or

constructor in the underlying Java virtual machine or accesses

a class or object field. The object result is put to the

assigned datasink. If the method has a

void return type, nothing is put.

The dash-commands describe the

desired Java call or field. If -new appears, the

call is to a constructor.

-forname ~@{classname} puts a Class instance of the specified class.

--,-obj ~@object or -class

~@{classname} indicates the object or class upon

which a method call or field is to be invoked.

- One of either

--,-obj ~@object or

-class is necessary in a

-method call or -field

invocation.

- This element is omitted when either the

-new or -forname dash-command

is used.

-new ~@{classname} puts a new object instance of the class indicated by the fully-decorated classname (e.g., java.lang.String) for which a constructor is to be called. Provide necessary arguments if any via -arg and/or -primarg and/or -castarg.

-field ~@{fieldName} puts the named field for the object or class specified. This java.lang.reflect.Field instance can be used as the -obj ~@object of later method calls.

-method ~@{methodname} indicates the name of

the method to be invoked.

- This element is omitted when the

-new or

-field or -forname dash-command

is used.

-

-arg ~@argobj places an object and its class

signature in the array to be used in method invocation.

- Multiple arguments are indicated by multiple usage of

-arg in left-to-right order matching the

Java method specification.

-

-castarg ~@argobj ~@{classname} places an

object and an arbitrary class designation in the array to

be used in method invocation.

- This is necessary when a class signature must match

exactly. Ublu handles most method calls searching back up

the implements/extends chain, but

-new

expects an exact match, so a superclass or interface must

be called out in this fashion.

- Multiple arguments are indicated by multiple usage of

-castarg in left-to-right order matching the

Java method specification.

-

-primarg ~@argobj places an object and its

class signature converted to a primitive type in the array

to be used in method invocation.

- Primitive types are passed to a method call as their wrappered types, e.g., int is passed as java.lang.Integer. -primarg allows calljava to specify that the method signature is for the primitive, not the wrapper class.

- To create numbers of specific Java types to use with -primarg to match method signatures, use the num command.

- Multiple arguments are indicated by multiple usage of

-primarg in left-to-right order matching the

Java method specification.

Note: The Ublu-coded extensions to Ublu in

the extensions subdirectory provide many examples

of the use of calljava.

See also: num

Examples

> put -to @obj ${ this is a test }$

> calljava -to @result -obj @obj -method length

> put -from @result

15

> num -to @num -int 12

> calljava -to @result -obj @obj -method substring -primarg @num

> put -from @result

st
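For readers who know Java reflection, the two calls above correspond roughly to the following sketch. It is an assumption that calljava resolves methods in this way, since the document does not show its implementation. The class CallJavaSketch and its helpers are hypothetical, and the string literal is an arbitrary stand-in rather than the exact value held in @obj.

```java
import java.lang.reflect.Method;

/** Hypothetical sketch of reflective method lookup with an explicit signature. */
final class CallJavaSketch {

    /** Returns true if type has a public method with exactly this name and signature. */
    static boolean hasMethod(Class<?> type, String name, Class<?>... signature) {
        try {
            type.getMethod(name, signature);
            return true;
        } catch (NoSuchMethodException e) {
            return false;
        }
    }

    /** Looks up the method by name and signature, then invokes it on target. */
    static Object call(Object target, String name, Class<?>[] signature, Object... args) {
        try {
            Method method = target.getClass().getMethod(name, signature);
            return method.invoke(target, args);
        } catch (ReflectiveOperationException e) {
            throw new IllegalStateException(e);
        }
    }

    public static void main(String[] args) {
        String obj = "this is a test";

        // Like: calljava -obj @obj -method length
        System.out.println(call(obj, "length", new Class<?>[0]));

        // Like: calljava -obj @obj -method substring -primarg @num
        // The signature names the primitive int, although the value travels boxed.
        System.out.println(call(obj, "substring", new Class<?>[] { int.class }, 12));

        // String has substring(int) but no substring(Integer).
        System.out.println(hasMethod(String.class, "substring", int.class));
        System.out.println(hasMethod(String.class, "substring", Integer.class));
    }
}
```

The last two lookups show why a separate dash-command for primitives is useful: the argument object is a java.lang.Integer either way, but only the int signature matches the method.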

The following example (examples/clHelp.ublu)

stacks parameters and builds a String array of

args in order to invoke the main(args []) method

of a utility class present in JTOpen.

FUNC clHelp ( lib cmd s u p ) $[

LOCAL @start LOCAL @end

num -to ~ -int 10

calljava -to ~ -forname java.lang.String

calljava -to @L[String -class java.lang.reflect.Array -method newInstance -arg ~ -primarg ~

string -to ~ -trim @@lib

string -to ~ -trim ${ -l }$

string -to ~ -trim @@cmd

string -to ~ -trim ${ -c }$

string -to ~ -trim @@s

string -to ~ -trim ${ -s }$

string -to ~ -trim @@u

string -to ~ -trim ${ -u }$

string -to ~ -trim @@p

string -to ~ -trim ${ -p }$

put -to @start 0

put -to @end 10

DO @start @end $[

calljava -class java.lang.reflect.Array -method set -arg @L[String -primarg @start -arg ~

]$

calljava -class com.ibm.as400.util.CommandHelpRetriever -method main -arg @L[String

]$

FUNC browseClHelp ( lib cmd s u p ) $[

LOCAL @filename

put -n -to ~ ${ file:// }$

~ -to ~ -trim

system -to ~ ${ pwd }$

\\ ${ system returns an ublu.util.SystemHelper.ProcessClosure object.

This calljava gets the output to concatenate to for the absolute URI. }$

calljava -to ~ -- ~ -method getOutput

lifo -swap

~ -to ~ -cat ~

~ -to ~ -cat /

~ -to ~ -cat @@lib

~ -to ~ -cat _

~ -to ~ -cat @@cmd

~ -to ~ -cat ${ .html }$

~ -to @filename -trim

put @filename

clHelp ( @@lib @@cmd @@s @@u @@p )

desktop -browse @filename

]$
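The reflective calls that clHelp makes through calljava (Array.newInstance, Array.set, and finally a main method taking a String array) can be sketched in plain Java as follows. This is a minimal sketch, not the Ublu implementation: ArgArraySketch and EchoTool are hypothetical names, EchoTool merely stands in for a utility class with a main(String[]) entry point, and the argument values are placeholders.

```java
import java.lang.reflect.Array;
import java.lang.reflect.Method;

// Hypothetical illustration class; not part of Ublu or JTOpen.
class ArgArraySketch {

    // Builds a String[] reflectively with Array.newInstance and Array.set.
    static Object buildArgs(String... values) {
        // newInstance(Class, int): the length parameter is a primitive int.
        Object array = Array.newInstance(String.class, values.length);
        for (int i = 0; i < values.length; i++) {
            Array.set(array, i, values[i]); // the index parameter is a primitive int as well
        }
        return array;
    }

    // Invokes the static main(String[]) of the target class with the prepared array.
    static void invokeMain(Class<?> target, Object argArray) {
        try {
            Method main = target.getMethod("main", String[].class);
            // argArray has static type Object, so it is passed as the single argument.
            main.invoke(null, argArray);
        } catch (ReflectiveOperationException e) {
            throw new IllegalStateException(e);
        }
    }

    public static void main(String[] args) {
        Object argArray = buildArgs("-l", "MYLIB", "-c", "MYCMD"); // placeholder values
        invokeMain(EchoTool.class, argArray);
        System.out.println(EchoTool.lastArgs);
    }
}

// Hypothetical stand-in for a utility class whose entry point is main(String[]).
class EchoTool {
    static String lastArgs = "";

    public static void main(String[] args) {
        lastArgs = String.join(" ", args);
    }
}
```

Both Array.newInstance and Array.set declare primitive int parameters, which is why the Ublu code above passes the length and the index with -primarg rather than -arg.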

cim

/0 [-to datasink] [--,-cim @ciminstance] [-keys

~@propertyKeyArray] [-namespace ~@{namespace}] [-objectname

~@{objectname}] [-url ~@{https://server:port}] [-xmlschema

~@{xmlschemaname}] [-new | -close | -path | -cred ~@{user}

~@{password} | -init ~@cimobjectpath | -ei ~@cimobjectpath] :

CIM client

The cim command creates and employs

Common Information Model clients. To do so it uses the

SBLIM

JSR-48 CimClient library available under the Eclipse

Public License.

(See also cimi)

To use the CIM client:

- Create an instance.

- Assign credentials to the instance.

- Create a CIM path.

- Use the path to initialize the instance.

- Create a path to the CIM object to be accessed.

- Use the path as an argument of the desired operation.

This is illustrated as follows:

Example

(examples/test/cimtest.ublu)

include /opt/ublu/extensions/ux.cim.property.ublu

FUNC cimtest ( url uid passwd namespc ) $[

LOCAL @client LOCAL @path

cim -to @client

@client -cred @@uid @@passwd

cim -to @path -url @@url -path

@client -init @path

cim -to @path -namespace @@namespc -objectname CIM_LogicalIdentity -path

put -n -s ${ Enumerate Instances for }$ put @path

@client -ei @path

string -to ~ -new

cim -to @path -namespace @@namespc -objectname ~ -path

put -n -s ${ Enumerate Classes for }$ put @path

@client -ec @path @true

cim -to @path -namespace @@namespc -objectname IBMOS400_NetworkPort -path

put -n -s ${ Get Instances for }$ put @path

@client -to @instances -ei @path

FOR @i in @instances $[

put -n -s ${ (( Instance looks like this )) }$ put -from @i

@client -to ~ -gi @i @false @false

lifo -dup lifo -dup lifo -dup

put ~

put ${ *** Putting path for instance *** }$

~ -path

put ${ *** Putting keys for instance *** }$

~ -keys

put ${ *** Putting properties for instance *** }$

~ -to ~ -properties

lifo -dup put ~

FOR @i in ~ $[

put -n -s ${ ***** property is }$ put @i

ux.cim.property.getName ( @i )

put -n -s ${ ***** property name is }$ put ~

ux.cim.property.getValue ( @i )

put -n -s ${ ***** property value is }$ put ~

ux.cim.property.hashCode ( @i )

put -n -s ${ ***** property hashcode is }$ put ~

ux.cim.property.isKey ( @i )

put -n -s ${ ***** property is key? }$ put ~

ux.cim.property.isPropagated ( @i )

put -n -s ${ ***** property is propagated? }$ put ~

]$

]$

@client -close

]$

cimi

/0 [-to datasink] [--,-cimi @ciminstance] [-class |

-classname | -hashcode | -keys | -key ~@{keyname} | -properties

| -propint ~@{intindex} | -propname ~@{name} | -path] :

manipulate CIM Instances

The cimi command manipulates CIM instances

returned by the cim client. CIM

instances are autonomic or can be provided

to the cimi command via --,-cimi

@ciminstance.

The dash-commands are as

follows:

- -class puts the class of the instance.

- -classname puts the classname of the instance.

- -hashcode puts the hashcode of the instance.

- -keys puts a collection of keys possessed by the instance.

- -key ~@{keyname} puts the key named keyname for the instance.

- -path puts the path of the instance.

- -properties puts a collection of properties possessed by the instance.

- -propint ~@{intindex} puts the property at index.

- -propname ~@{name} puts the property with name name.

Note: There are extensions to Ublu CIM

support in the file(s)

extensions/ux.cim.*.ublu

See also: cim

collection

/0? [--,-collection ~@collection] [-to datasink] [-show |

-size] : manipulate collections of objects

The collection command provides support for Java

collections. The collection (created elsewhere) is provided to

the command in a tuple argument to the -- or

-collection dash-command.

The operations are as follows:

- -show puts a string representation of the collection.

- -size puts the integer size of the collection.

commandcall

/4? [-as400 ~@as400] [-to datasink] ~@{system}

~@{userid} ~@{passwd} ~@{commandstring} : execute a CL

command

The commandcall command executes the CL command represented by commandstring on

the IBM i system system on behalf of userid with

password passwd. The commandstring is a quoted

string representing the entire CL command and its arguments. If

the -as400 dash-command is provided, then the

arguments system, userid, and passwd

must be omitted.

If the command results in one or more AS400 Messages, the

Message List is put.

Note that CL display and menu commands do not work! Also,

you may have to juggle shell quoting to issue such command

lines; it's definitely easier to enter this sort of thing in

interpretive mode or as an Ublu program or function.

Example

commandcall -to foo.txt somehost bluto ******** ${ SNDMSG MSG('Hello my friend') TOUSR(fjdkls) }$

# Performs a SNDMSG on SOMEHOST on behalf of user bluto sending the text "Hello my friend" to a user FJDKLS. Any output returned by the command is redirected to a local file foo.txt.

as400 -to @myas400 somehost bluto ******** # creates an as400 instance and stores it in the tuple variable @myas400

commandcall -as400 @myas400 -to foo.txt ${ SNDMSG MSG('Hello my friend') TOUSR(fjdkls) }$

# Performs a SNDMSG just as above using the as400 instance in place of a repetition of credentials.

const

/2? [-to datasink] [-list | -create | -clear |

-defined ~@{*constname} | -drop ~@{*constname} | -save |

-restore | -merge ] ~@{*constname} ~@{value} : create a

constant value

The const command:

- creates a named constant with a string value

- drops a named constant from the map of named

constants

- queries the existence of a named constant

- displays the map of named constants

- clears the map of named constants

The default action is -create.

The name of the constant must start with an asterisk